The system assumes the moons have a spherical base. The system uses a geodesic meshing for the base sphere to guarantee each surface patch has the same area. The system uses this structure only as a computational grid for the procedural generation, the actual moon surface will be much smoother than the generation grid.

Geodesic sphere

Noise-perturbed geodesic sphere

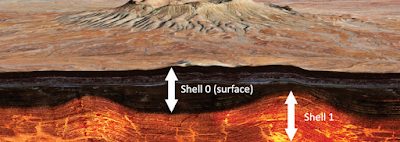

Starting from this base, the system will use a series of concentric shells. Each cell will determine the volumetric characteristics up to the next concentric shell. The outmost shell will provide the surface properties of the moon.

Multiple concentric shells

Each shell will be optionally distorted by a low-frequency 3D noise that is unique to the shell. If configured to be so, it is possible for an inner shell to overcome an outer shell.

Shell distortion

The preceding diagrams have exaggerated the distance between shells, and the magnitude of their distortion, to provide a better understanding of this constructive process. In practice, shells will be much closer to each other, and the magnitude of their distortion will be proportionally smaller.

The following sections will describe how the outmost shell is produced. The same principles will apply to the production of the inner shells, for this reason, the definition of inner shells will not be discussed in detail.

The procedural moon system requires both a real-time component and an offline component. The real-time component will be running in the player’s computer. The offline component will run in the game developer’s computers. The offline generation component will produce information that can be quickly augmented by the real-time component.

Each vertex in a spherical shell will be classified as belonging to one specific biome. A biome is a collection of surface properties, including, but not limited to: elevation, material distribution, instance placement, and material coloration.

Example of biome

The biome information contains entropy-rich features like craters, dry seas, surface cracks due to gravitational tides.

Large crater captured in biome information

The biome definition also contains planting rules, which will determine the location, frequency, and randomization of smaller features like rocks, boulders, overhangs, etc.

Rock instances over terrain

Each biome is be made of tile-able elements so the real-time component can apply the same information in multiple locations of the moon, and in other moons as well. The biome information will also be designed in a way that fast distortion and re-combination are possible, thus reducing repetitive and predictable patterns in the environment.

The system uses two types of biome tiles: Transitional and Isolated.

Transitional biome information will appear in regions where a biome is transitioning into a neighboring biome. Isolated biome information will appear in regions the system can guarantee there are no neighboring biomes.

These two distinct modes are needed because some large biome features like craters, can only be placed in areas where the biome is not transitioning into another biome type since biome transitions can affect the height profile and overall look of the terrain features.

The following simplified example shows a moon that uses three different biomes: Polar, Tropical, and Equatorial. The biomes are colored Red, Blue, and Green respectively.

A moon with three biomes

Patches, where there is an Isolated biome, appear in a solid color, patches with Transitional biomes show a blend of colors.

The system will use a set of pre-computed noises to introduce variation in the biome transition zones, creating interesting, unique transitions from one biome into another.

Procedural noise applied to biome transitions

The preceding images have exaggerated the size of each biome patch relative to the moon’s size to provide a better understanding of the biome patching technique. A single biome patch will cover an area of approximately 10km by 10km. A moon having 1,500 km radius will have a surface area of 30,000,000 km2. To cover its surface, it would take nearly 300,000 of these patches.

Biome grid resolution for a moon of 1,500 km radius

The biome grid resolution can lead to a very large number of biome patches. The system will not keep track of these individually since each patch’s location can be analytically computed, and for most of the patches, their biome assignment can be inferred from a high-order biome map.

High-order biome maps will provide the moon’s key characteristics when viewed from far away. The system will use these maps to generate additional detail for closer views, keeping the moon’s definition consistent when viewed at different scales.

High-order biome maps are 2D images that can fit the moon’s surface using a custom 2D parametrization. Each point of the map contains a numeric identifier for the biome that is prevalent in that location of the surface or internal shell.

2D biome map

The image above shows a map with four different biome types (blue, red, yellow and white). The image is wrapped around the sphere using a custom 2D parametrization. One possible parametrization is shown below:

2D parametrization for Biome Id map

In addition to the biome Id map, the system will allow other maps. For instance, maps containing elevation and surface color.

Elevation, tint, and a far-range rendering of the moon

A single pixel in each image may cover 4 km2, making them inexpensive to produce. These elevation and tint maps can be either procedurally generated or artist-made. For a project containing only dozens of moons, and where each moon is required to have rich, unique natural properties, this is a stage where artist input is likely to yield the best returns.

The following image captures the entire approach to generating shell surface elevation and other properties:

The three scales used for moon construction

A moon will be made of at least one spherical shell. In case there are multiple shells, the system will extrude inner shells based on their maximum radius and the shell’s height function, which is obtained from the same multi-scale process described in the previous sections.

For each shell, the moon designer will provide a high-order biome distribution map, biome definitions for the biomes appearing in this map, and material definitions for the materials appearing in the biome.

The system accepts “air” as a valid material, which can be used to create cavities within any shell.

A cross-section of the moon terrain, displaying two different shells

A single shell will also be a volumetric object, and its depth will be defined by a stack of materials. The material stack information will be contained in the biome.

A material stack made of 6 materials

Since underground materials will rarely become exposed, they can be expressed at a much lower resolution than biome surface materials and the use of local procedural noises will not be noticed by the player.

The material stack functionality may be sufficient to place as some materials in the stack can be sufficiently rare. The biome designer will be able to configure the abundance and occurrence pattern of any material in the stack.

The procedural generation is executed by both GPU shaders and CPU voxel algorithms.

The shader will compute a fragment color for each of the three scales, and it will blend these samples based on the distance from the camera to the fragment.

Any detail that is small enough to not register in the geometry, but that still contributes to the perceived complexity of the surfaces, will be captured by normal maps generated in real-time from the procedural definition of materials, biomes or the high-order moon definition maps. This technique will keep low polygon counts for the scene.

The trade-off is that biome and high-order definition maps will have to be resident on GPU while the moon is in view. It is possible to selectively upload only the mipmaps necessary for rendering the current moon scale. The total amount of GPU memory required at any time will be reduced to a minimum by streaming mipmaps in and out of GPU as the viewer position changes.

When features become large enough due to their proximity to the camera, they begin to appear in the geometry output by the real-time voxel generation. This also applies to any sections of the moon that may have been terraformed or mined by the players. If the changes are large enough they could be perceived from orbit, as Voxel Farm’s adaptive scene manager will increase the LOD for any areas with modifications deemed important by the application.